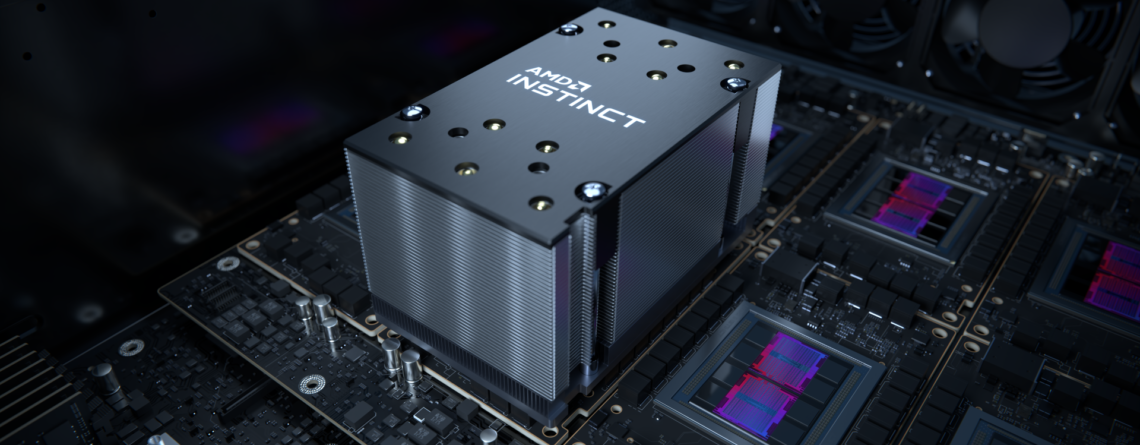

AMD Instinct™ MI200 accelerators

The AMD Instinct™ MI200 series accelerators are AMD’s newest data center GPUs, designed to power discoveries in Exascale systems and to enable scientists to tackle our most pressing challenges, from climate change to vaccine research. With MI200 accelerators and the ROCm™ 5.0 software ecosystem, innovators can tap the power of the world’s most powerful HPC and AI data center GPUs to accelerate their time to science and discovery.

World’s Fastest HPC & AI Accelerator

Powered by the 2nd Gen AMD CDNA™ architecture, AMD Instinct™ MI200 accelerators deliver a quantum leap in HPC and AI performance over existing data center GPUs. With a dramatic 4x advantage in HPC performance compared to existing data center GPUs, the MI200 accelerator delivers outstanding performance for a broad set of HPC applications. The MI200 accelerator is also built to accelerate deep learning training: the MI250X surpasses 380 teraflops of peak theoretical FP16 performance, offering users a powerful platform to fuel the convergence of HPC and AI.

- 2nd Gen AMD CDNA™ architecture purpose-built to drive discoveries at Exascale for HPC and AI workloads

- World’s fastest accelerator, the AMD Instinct MI250X, delivers up to 47.9 TFLOPS peak theoretical double precision (FP64) HPC performance and up to 383 TFLOPS peak theoretical half-precision (FP16) AI performance¹

- 2nd Gen Matrix Core Technology enables the AMD Instinct MI250X to deliver up to 95.7 TFLOPS peak theoretical double precision (FP64 Matrix) performance

- With 128 GB of high-speed HBM2e memory, the AMD Instinct MI250 and MI250X deliver an industry-leading 3.2 TB/s peak theoretical memory bandwidth, supporting the most data-intensive workloads

- Engineered with multi-chip GPU packaging to deliver leading performance efficiency and memory throughput
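As a rough illustration of how the peak figures quoted above relate to one another, the short sketch below derives a few ratios from them. The constant and function names are illustrative only, and every input is a peak theoretical value taken from the list above, not a measured result.

```python
# Peak theoretical figures quoted above for the AMD Instinct MI250X.
FP64_VECTOR_TFLOPS = 47.9     # double precision (FP64)
FP64_MATRIX_TFLOPS = 95.7     # double precision via Matrix Cores
FP16_TFLOPS = 383.0           # half precision (FP16)
MEMORY_BANDWIDTH_TBPS = 3.2   # HBM2e peak memory bandwidth in TB/s


def ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator rounded to two decimals."""
    return round(numerator / denominator, 2)


# How much the Matrix Cores add over the vector FP64 peak.
matrix_vs_vector = ratio(FP64_MATRIX_TFLOPS, FP64_VECTOR_TFLOPS)

# How the FP16 peak compares with the vector FP64 peak.
fp16_vs_fp64 = ratio(FP16_TFLOPS, FP64_VECTOR_TFLOPS)

# FP64 operations available per byte of memory traffic at peak.
# Both figures are in "tera" units, so the prefixes cancel.
fp64_flops_per_byte = ratio(FP64_VECTOR_TFLOPS, MEMORY_BANDWIDTH_TBPS)

if __name__ == "__main__":
    print(f"FP64 Matrix vs. FP64 vector: {matrix_vs_vector}x")
    print(f"FP16 vs. FP64 vector:        {fp16_vs_fp64}x")
    print(f"FP64 flops per byte at peak: {fp64_flops_per_byte}")
```

Read this way, the FP64 Matrix peak is about twice the vector FP64 peak, and a kernel would need on the order of 15 FP64 operations per byte moved before the compute peak, rather than memory bandwidth, becomes the limit. Real applications typically land below peak theoretical values.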

Innovations Delivering Performance Leadership

AMD innovations in architecture, packaging and integration are pushing the boundaries of computing by unifying the most important processors in the data center: the CPU and the GPU accelerator. With industry-first multi-chip GPU modules along with 3rd Gen AMD Infinity Architecture, AMD is delivering performance, efficiency and overall system throughput for HPC and AI using AMD EPYC™ CPUs and AMD Instinct™ MI200 series accelerators.

- AMD Instinct™ MI200 series accelerators, powered by the 2nd Gen AMD CDNA™ architecture, are built on an innovative multi-chip design to maximize throughput and power efficiency for the most demanding HPC and AI workloads

- With AMD CDNA™ 2, the MI250 has new Matrix Cores delivering up to 7.8X the peak theoretical FP64 performance vs. AMD’s previous-generation GPUs, and offers the industry’s best aggregate peak theoretical memory bandwidth at 3.2 terabytes per second

- 3rd Gen AMD Infinity Fabric™ technology enables direct CPU-to-GPU connectivity, extending cache coherency and allowing a quick and simple on-ramp for CPU codes to tap the power of accelerators.

- AMD Instinct MI250 accelerators with advanced GPU peer-to-peer I/O connectivity through eight AMD Infinity Fabric™ links deliver up to 800 GB/s of total aggregate theoretical bandwidth.

- The Frontier supercomputer, one of the first Exascale supercomputers, is the first to offer a unified compute architecture powered by AMD Infinity Platform™ based nodes.
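For a sense of scale, the eight Infinity Fabric™ links and the 800 GB/s aggregate figure quoted above can be broken down per link. The sketch below assumes the aggregate is spread evenly across all links, which the text implies but does not state; the function name is illustrative.

```python
def per_link_bandwidth_gbps(aggregate_gbps: float, link_count: int) -> float:
    """Split an aggregate peak bandwidth evenly across a number of links.

    Assumes every link contributes the same share of the aggregate.
    """
    if link_count <= 0:
        raise ValueError("link_count must be positive")
    return aggregate_gbps / link_count


# Figures quoted above for the AMD Instinct MI250: eight links,
# up to 800 GB/s total aggregate theoretical bandwidth.
mi250_per_link = per_link_bandwidth_gbps(800.0, 8)

if __name__ == "__main__":
    print(f"Peak theoretical bandwidth per link: {mi250_per_link} GB/s")
```

Under that even-split assumption, each link accounts for up to 100 GB/s of peak theoretical bandwidth.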

Ecosystem without Borders

AMD ROCm™ is an open software platform allowing researchers to tap the power of AMD Instinct™ accelerators to drive scientific discoveries. The ROCm platform is built on the foundation of open portability, supporting environments across multiple accelerator vendors and architectures. With ROCm 5.0, AMD extends its open platform powering top HPC and AI applications with AMD Instinct MI200 series accelerators, increasing accessibility of ROCm for developers and delivering leadership performance across key workloads.

- AMD ROCm™ – Open software platform for science used worldwide on leading exascale and supercomputing systems

- ROCm™ 5.0 extends AMD’s open platform for HPC and AI with optimized compilers, libraries and runtimes adding support for MI200

- Open & Portable – ROCm™ open ecosystem supports heterogeneous environments with multiple GPU vendors and architectures

- ROCm™ 5.0 library optimizations using new MI200 features: FP64 Matrix ops, reduced kernel launch overhead, Packed FP32 math and FP64 atomics support

- ROCm™ 5.0 extends access to AMD accelerators from the data center to the desktop, enabling workstation ROCm support with Radeon™ Pro W6800 GPUs.

- Through the AMD Infinity Hub, researchers, data scientists and end-users can easily find, download and install containerized HPC apps and ML frameworks that are optimized and supported on AMD Instinct™ MI200 and ROCm™.